My first steps in natural language processing: converting a sentence to a conceptual graph

Abstract

The article describes my small experiment: extracting information as a conceptual graph from a phrase in natural language.

This will allow me to manage the game character by voice or text messages.

The obtained results are a good basis for me for achieving some practical results in the future. But they are not yet suitable for practical using right now.

Background

During the increased interest to AI in the society, I also wanted to try to do something in this direction. Using it in games seemed as evident to me. I have gotten an idea: to make a simple scene in Unity 3d by standard assets and try to write a simple AI for the scene. The examples of game logic (which i saw) were pretty much spaghetti code in C#. These rules in that code was a very simple and far from intelligence.

On the one hand, it is understandable: players want a lot of stupid "meat" for destroying it. And in this case a game does not require an expensive and high-performance device.

At the same time, it disappointed me: where are the achievements in the area of AI. In addition, in real games, the dullness of the characters is compensated by an excellent art. I am only able to program. And I do not have many opportunities for getting a such art. I have an interest in voice control of the character. Also it seemed to me as interesting to use documents in the natural language at economic and political simulators.

I quickly made a choice for a symbolic approach and for a system which is based on explicit rules. It allows to clearly define a behavior of a character and to explain why this or that action was performed.

The feature that seemed me as very difficult and as very interesting: to convert a text in natural language to a machine-readable format. It is still difficult for me, because. But now I have an expandable basis.

I was looking for a ready-made solution, because writing such solution is complex and also it takes a lot of time. I did not find a solution which suited for me. But controlling by natural language is a cool feature. I also have bought a book which briefly describes main stages of natural language processing. So I decided to try to convert at least one phrase by myself. My way for it and the result are described here.

Formulation of my task

I want to convert the sentence "I know the dog" to a conceptual graph. This task seems to me as small and it may be solved in a short time. This is only one phrase which contains only four words.

Making a phrase tree of target sentence

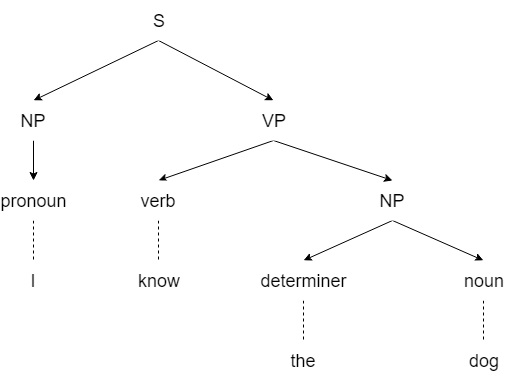

Firstly, I must make a phrase tree of target sentence. The sentence "I know the dog" should be transformed to a tree as in figure 1.

Initially, I planned to use a ready-made solution for this. The solutions for making a phrase tree were mainly based on Java. After some time, I had found a library in C#. She was installed easily and immediately gave me a result. However, this library did not always correctly process the sentences in Present Simple with the subject in the third person. So, the verb "likes" was defined as a noun in the phrase "She likes the dog".

I also planned to use words in the basic form and to label tense and aspect of the sentence as relations of conceptual graph. But I could not extract a basic form of the word by that library.

Also I need to assign a class (which represents some meaning) to the word. It required a separate dictionary and I had to write the dictionary manually.

I needed to look for another library. I did not have any wish to start searching again. So I decided to write a simple syntactic parser manually. I would be very grateful if anyone advise me a good ready-made solution.

At the same time, the previously used library normally divided a paragraph to separate sentences. So I left the library for this.

Firstly, I have written a lexical analyzer and I transformed text to list of tokens.

Then I started to make a phrase tree by a variant of ATN (augmented transition network).

At the moment I have finite-state machines for noun phrase, verb phrase and for sentence in general.

The finite-state machine for noun phrase can only recognize a noun and its determiner. In the future I plan to implement recognition of prepositional phrases, adjectives, numerals and other parts of speech.

The finite-state machine for verb phrase will be specific for each combination of time and aspect. So, I will also extract tense and aspect from parsed sentence. Now I can process present simple and past simple.

The finite-state machine of sentence takes noun phrase of subject and verb phrase and makes phrase tree of the sentence.

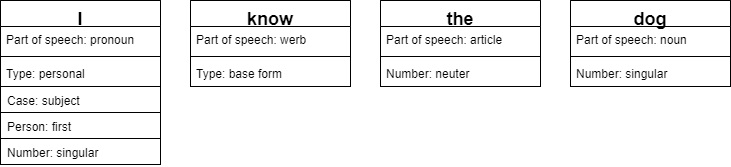

The syntactic parser uses a small dictionary (Figure 2). This dictionary contains basic grammatical information about the word. Also It contains a basic form of the word. Each word can be in relation to different parts of speech or to the same part of speech, but with a different grammatical meaning.

So, there are many variations of using the word in the construction of the phrase tree. In finite stages of parsing we can choose correct variant easily. But in first stages it will difficult. And the correct version will be known only after the successful completion of the parsing.

Therefore, my algorithm tries to make many possible variants of constructing phrase tree of sentence. There will be a lot of such variants at the beginning of the parsing. The amount of possible variants will be significantly reduced by the end of the analysis.

The multivariance code is not very convenient for creating. It will be using a lot of memory. Performance of the code can also suffer. But it will allow to save many efforts by removing predictions from the code. Execution will have visited possible variants and success way will be as the result.

Now the dictionary consists of four words and is defined into the code. This is a necessary and sufficient minimum for the syntactic parsing of the phrase "I know the dog".

In the future, the dictionary will be expanded to the required number of words. The minimum English vocabulary for the non native speaking tourist is about 1000 words. In my opinion it is quite implementable volume of work. Also I plan to make the dictionary stored in a file and extensible.

There are not very much ways of parsing verb phrases. This gives me a hope of building a parser that will be more suitable for practical using.

A ready-made parser can also be used in the future. It would remove the limitation on the number of words and grammatical constructions.

The syntactic parser returns a list of phrase tree whis represent the processed text. Firstly, parsed text can contain two or more sentence. Secondly, a sentence can contain parts which can be interpreted as separate sentences. For example: parts of compound sentences.

Making a conceptual graph

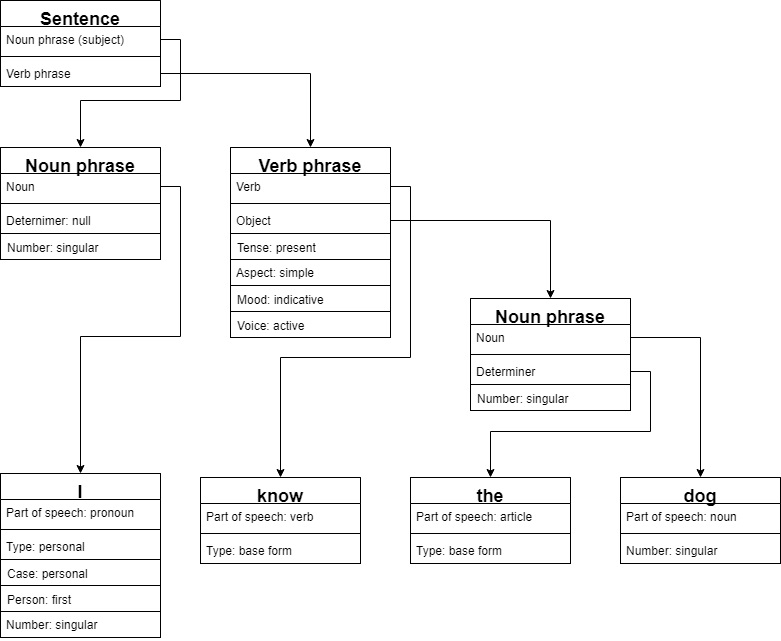

I launch a semantic analyzer for each phrase tree. The semantic analyzer will have visited all nodes of processing phrase tree.

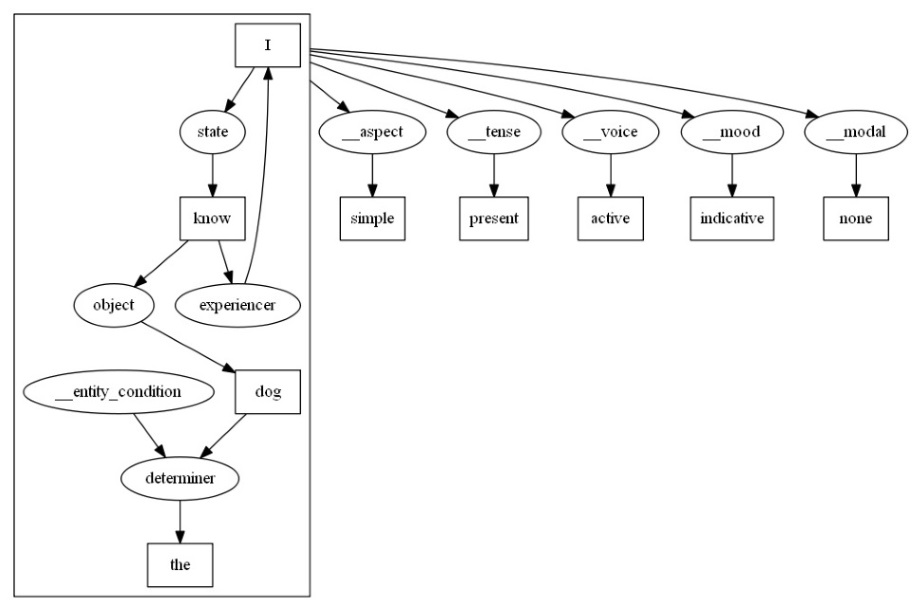

During processing the words "I", "know", "dog" are interpreted as concepts. For these concepts, logical classes are extracted from the dictionary. The logical classes define the meaning of the words in human communication. I can not extract the meaning from grammatical information. These logical classes help me to clarify what meaning does have the concept in the conceptual graph.

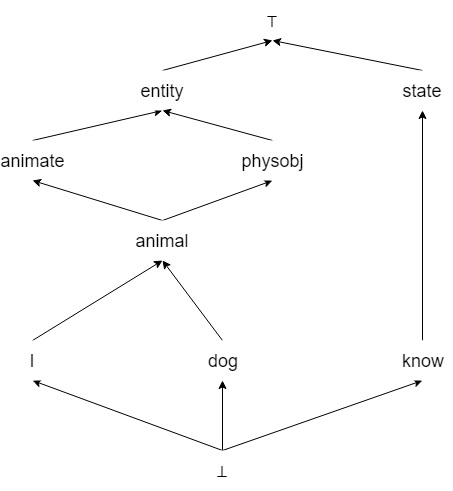

Also, these logical classes can be subclasses of other logical classes. For writing less volume of code, I have specified for the word the logical class that directly relates to it. Logical superclasses are computed by information about inheritance relations. Currently the relations are defined in source code. I took them from an example in the book "Artificial Intelligence: Structures and Strategies for Complex Problem Solving" by George F. Luger. The resulting inheritance diagram is shown in Figure 4.

So the concept "know" is correlated with the logical class "state". The concept "I" is defined as "subject" in the phrase tree. Also, the "I" correlates with the logical class "animate". In the example from the book, the concepts "animate" and "subject" correlate with "state" using the relation "experiencer" (holder of state) (Figure 5). It determines the relationship between the mental state and its holder.

The relation "experiencer" corresponds to the inverse relation "state". It also connects the concepts "I" and "know", but in the opposite direction (Figure. 6).

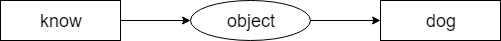

The concept "dog" is defined as "object" in a phrase tree. Also, "dog" is an "entity". "state" and "entity" are related by the relation "object" (Figure 7).

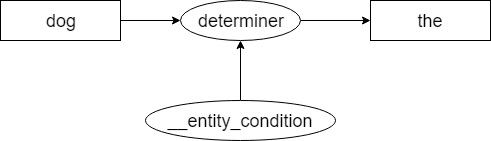

In addition, "dog" has a determiner "the". This means that the description allowing to identify this instance of "dog" was encountered earlier in the text. This knowledge will help me in the processing of this conceptual graph. Therefore, "dog" and "the" are related by the relation "determiner" (Figure 8). The relation "determiner" is marked by the relation "__entity_condition". This relationship will be helpful during converting a conceptual graph to a predicate calculus record.

Aspect and tense are written into the resulting conceptual graph as relations which are relating directly to this graph. I do not like this and I would have written the aspect and tenses as the properties of the graph. I should limit myself to the elements described by John Sowa - concepts and relationships. Otherwise my result will not be a conceptual graph of John Sowa.

Tense is indicated using the relation "__tense". Aspect is indicated using the relation “__aspect”. These relationships can be helpful for building temporal logics.

I will using voice for making a conceptual graph. Therefore, the graphs for the active voice and the corresponding expression in the passive voice must be identical. Now the voice is indicated by the relationship "__voice". Maybe I will be using it in the future.

As a result, my algorithm made such conceptual graph (Figure 9).

Results and plans

So, I have completed my task: I have made a conceptual graph from the phrase "I know the dog".

As a result of the work I have a basis which can be expanded in the future:

- increase the size of the dictionary;

- make as more convenient working with the dictionary;

- add other types of English phrases;

- add processing of prepositional, adjective, numeral phrases;

- and much more.

Another important direction: applying conceptual graphs in a game logic for driving character using natural language.

Ahead I have a lot of interesting work!

References

- George F. Luger Artificial Intelligence: Structures and Strategies for Complex Problem Solving (4th Edition)

- John F. Sowa Conceptual Graphs